Technology Article

Sensible 4’s Positioning – How Our Autonomous Vehicles Know Where They’re Going

Positioning and obstacle detection are the two foundations of autonomous driving.

All driving relies on positioning. If a vehicle doesn’t know its location, it can’t place itself on a map or know where the road or the planned route goes. Without positioning, driving would be impossible.

On the other hand, position and route data won’t be of much use if the vehicle can’t recognize obstacles and other objects around it, such as a pedestrian on a sidewalk or a red light at an intersection. The vehicle’s AI has to be aware of all these details to make autonomous driving possible.

The visualization of our positioning and mapping above shows our autonomous GACHA vehicle driving in the Pasila district of Helsinki during the FABULOS pilot. A bridge, traffic signs, buildings and trees are visible in the video as the vehicle drives along.

Positioning Requires Accuracy and Reliability

The more precise and reliable the positioning needs to be, the harder it is to achieve. For example, satellite positioning pinpoints a location to within a few meters at best. Local blind spots, such as tunnels or the areas between tall buildings, can introduce significant uncertainty.

The accuracy of satellite positioning can be improved with a dedicated correction signal. The most common correction technique is RTK (Real-Time Kinematic positioning), which can provide up to centimeter-level accuracy.
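To illustrate how a correction signal helps, here is a minimal Python sketch of a simpler relative of RTK: code-based differential correction (DGNSS). A base station at a known, surveyed position compares the ranges it measures with the true geometric ranges and broadcasts the difference; a nearby rover adds those corrections and solves for its position by least squares. Real RTK works on carrier-phase measurements and must also resolve integer ambiguities, and this sketch ignores receiver clock bias. The satellite positions, error values and the function names `pseudoranges` and `solve_position` are hypothetical.

```python
import numpy as np

# Hypothetical satellite positions (metres) in a local frame; real GNSS
# satellites orbit roughly 20,000 km above the receiver.
SATS = np.array([
    [15e6, 0.0, 20e6],
    [-12e6, 9e6, 21e6],
    [3e6, -16e6, 19e6],
    [-8e6, -10e6, 22e6],
    [0.0, 14e6, 20e6],
])
# Hypothetical per-satellite errors (metres), e.g. atmospheric delay and orbit
# error, assumed identical at the nearby base station and rover.
COMMON_ERR = np.array([5.0, -3.0, 8.0, 2.0, -6.0])


def pseudoranges(receiver):
    """Measured ranges: true geometric range plus the shared error."""
    return np.linalg.norm(SATS - receiver, axis=1) + COMMON_ERR


def solve_position(ranges, guess, iterations=10):
    """Gauss-Newton least squares: find x minimising sum((ranges - |sats - x|)^2)."""
    x = np.asarray(guess, dtype=float)
    for _ in range(iterations):
        diff = x - SATS
        dist = np.linalg.norm(diff, axis=1)
        jac = diff / dist[:, None]            # derivative of |x - s_i| w.r.t. x
        residual = ranges - dist
        step, *_ = np.linalg.lstsq(jac, residual, rcond=None)
        x = x + step
    return x


base = np.zeros(3)                            # surveyed, known base position
rover = np.array([1200.0, -800.0, 15.0])      # unknown to the rover in practice

# Base station: correction = known geometric range - measured range.
corrections = np.linalg.norm(SATS - base, axis=1) - pseudoranges(base)

raw_fix = solve_position(pseudoranges(rover), guess=base)
corrected_fix = solve_position(pseudoranges(rover) + corrections, guess=base)

uncorrected_err = np.linalg.norm(raw_fix - rover)
corrected_err = np.linalg.norm(corrected_fix - rover)
print(f"uncorrected error: {uncorrected_err:.2f} m")
print(f"corrected error:   {corrected_err:.6f} m")
```

The correction only works because the base and rover see nearly the same errors; the further apart they are, the less the errors cancel.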

However, all satellite positioning has an inherent weakness: it relies on infrastructure. Even RTK can’t improve positioning in satellite blind spots. That’s why companies developing autonomous driving are turning to positioning technologies that work independently of satellites. This is at the core of Sensible 4’s know-how: improving positioning independently of weather or infrastructure.

“Our positioning technology is based on laser scanners, or LiDAR technologies”, says Sensible 4’s CTO Jari Saarinen. These sensors scan the vehicle’s surroundings with laser beams, producing a 3D model of the environment. Positioning can also draw on data from other sensors, such as RTK satellite positioning or radar.
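As a rough illustration of how a laser scanner’s measurements become a 3D model, each return can be converted from the sensor’s polar measurements (range, azimuth, elevation) into an x, y, z point. The Python sketch below assumes one particular axis convention (x forward, y left, z up); real scanners document their own conventions and also need corrections for sensor mounting and vehicle motion, which are omitted here. The function name `scan_to_points` is hypothetical.

```python
import numpy as np


def scan_to_points(ranges, azimuths, elevations):
    """Convert LiDAR returns (range in metres, angles in radians) into
    Cartesian x, y, z points in the sensor frame.

    Assumed convention: x forward, y left, z up; azimuth measured from x
    towards y, elevation measured up from the horizontal plane.
    """
    r = np.asarray(ranges, dtype=float)
    az = np.asarray(azimuths, dtype=float)
    el = np.asarray(elevations, dtype=float)
    horizontal = r * np.cos(el)           # projection onto the horizontal plane
    x = horizontal * np.cos(az)
    y = horizontal * np.sin(az)
    z = r * np.sin(el)
    return np.column_stack((x, y, z))     # shape (N, 3): the point cloud


# Three hypothetical returns: straight ahead, 90 degrees to the left,
# and straight ahead but 30 degrees upwards.
points = scan_to_points(
    ranges=[10.0, 5.0, 2.0],
    azimuths=[0.0, np.pi / 2, 0.0],
    elevations=[0.0, 0.0, np.pi / 6],
)
print(points.round(3))
```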

“Laser scanners on the roof are a common sight on various autonomous driving prototypes. They see their surroundings day and night, but susceptibility to poor weather is a key issue”, Saarinen notes.

The beam emitted by the scanner can’t pass through a drop of water, mist, or a grain of sand carried by the wind, so these create deviations and noise in the laser-scanned 3D model. Many autonomous driving prototypes grind to a halt in poor weather.

Dealing With a Massive Amount of Data

The more accurate the scanner, the more data it produces. More data enables more accurate conclusions, but at a high cost: more accurate sensors are much more expensive, and processing their output demands significantly more computing power. The more powerful equipment also needs more electricity and cooling, and takes up more space.

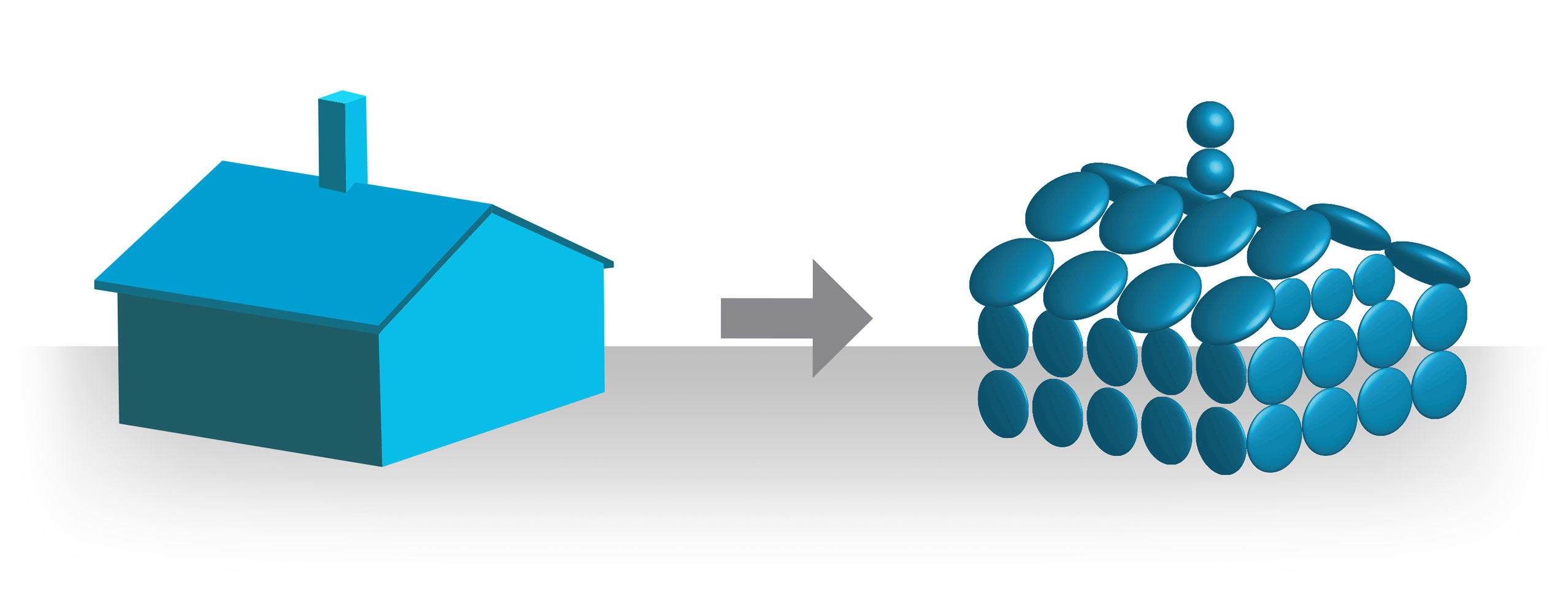

Sensible 4’s positioning technology takes a different approach to data produced by laser scanners. Instead of trying to constantly remodel the surroundings as a cloud of points, it builds a probability model of the surroundings, which streamlines processing significantly while filtering out noise such as snow or rain.

Our technology starts building the model by splitting the surroundings into cubes of roughly one cubic meter, much like the cube-based world of Minecraft. The laser-scanned points in each cube are summarized computationally as a normal (Gaussian) probability distribution, which can be pictured as an ellipsoid and is relatively easy to handle mathematically. For the autonomous vehicle’s computer, the world consists of such distributions, as does the map stored in the vehicle. By comparing the two, the vehicle can calculate its position in the real world.
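The cube-and-ellipsoid approach described above closely resembles the published Normal Distributions Transform (NDT) used for LiDAR scan matching. The following Python sketch illustrates that general technique, not Sensible 4’s own implementation: it summarizes the map points in each 1 m cube as a Gaussian, scores a new scan by how well its points fit the map’s Gaussians, and keeps the best x/y offset. Assumptions and simplifications: the scene (two walls), noise levels, `MIN_POINTS`, the initial guess and all function names are hypothetical; rotation is ignored; and a brute-force grid search stands in for the gradient-based optimization normally used with NDT.

```python
import numpy as np

CELL_SIZE = 1.0   # metres; cube edge length, matching the ~1 m cubes in the text
MIN_POINTS = 5    # hypothetical: sparser cubes are treated as noise and dropped


def sample_scene(rng, n_per_wall=300, noise=0.03):
    """Hypothetical scene: two perpendicular walls, sampled with sensor noise."""
    wall_a = np.column_stack((
        np.full(n_per_wall, 5.5),                # wall at x = 5.5 m
        rng.uniform(-4, 4, n_per_wall),
        rng.uniform(0, 2, n_per_wall),
    ))
    wall_b = np.column_stack((
        rng.uniform(-4, 4, n_per_wall),
        np.full(n_per_wall, 3.5),                # wall at y = 3.5 m
        rng.uniform(0, 2, n_per_wall),
    ))
    points = np.vstack((wall_a, wall_b))
    return points + rng.normal(0.0, noise, points.shape)


def build_cells(points):
    """Group points into cubes and summarise each cube as a 3-D Gaussian."""
    keys = np.floor(points / CELL_SIZE).astype(int)
    cells = {}
    for key in np.unique(keys, axis=0):
        members = points[np.all(keys == key, axis=1)]
        if len(members) < MIN_POINTS:
            continue
        mean = members.mean(axis=0)
        cov = np.cov(members, rowvar=False) + 1e-4 * np.eye(3)  # keep invertible
        cells[tuple(key)] = (mean, np.linalg.inv(cov))
    return cells


def score(points, cells):
    """NDT-style score: sum of each point's Gaussian likelihood in its cube."""
    keys = np.floor(points / CELL_SIZE).astype(int)
    total = 0.0
    for p, key in zip(points, keys):
        cell = cells.get(tuple(key))
        if cell is not None:
            mean, inv_cov = cell
            d = p - mean
            total += np.exp(-0.5 * d @ inv_cov @ d)
    return total


def localize(scan, cells, guess, radius=0.3, step=0.05):
    """Brute-force search over x/y offsets around an initial guess."""
    best, best_score = None, -1.0
    offsets = np.arange(-radius, radius + step / 2, step)
    for dx in offsets:
        for dy in offsets:
            candidate = guess + np.array([dx, dy, 0.0])
            s = score(scan + candidate, cells)
            if s > best_score:
                best, best_score = candidate, s
    return best


rng = np.random.default_rng(0)
map_cells = build_cells(sample_scene(rng))        # the map stored in the vehicle

true_offset = np.array([1.2, -0.6, 0.0])          # vehicle position, unknown to it
scan = sample_scene(rng) - true_offset            # fresh scan in the vehicle frame
guess = np.array([1.0, -0.4, 0.0])                # hypothetical prior, e.g. odometry

estimate = localize(scan, map_cells, guess)
print("estimated offset:", estimate.round(2))
```

Because each cube is reduced to a mean and a covariance, scoring a candidate position needs only one cube lookup and a small matrix product per scan point, rather than a search through the full map point cloud.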

Sensible 4 Means Better Processing

Objects close to the vehicle, such as nearby cars, trees or buildings, can produce thousands of data points per second. Reducing these objects to ellipsoids cuts the amount of data by up to 90% while effectively filtering out noise such as rain, dust storms, snow on the ground, or stray leaves. This means a regular, passively cooled computer is all that is required for real-time calculation of position. No large GPUs are needed.
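A rough way to see where the data savings come from: a raw point costs three numbers (x, y, z), while a cube summarized as an ellipsoid costs nine (a 3-element mean plus the six unique entries of a symmetric 3×3 covariance), however many points fall inside it. The Python sketch below uses a hypothetical wall, hypothetical raindrop returns and a hypothetical `min_points` threshold, and drops sparsely populated cubes as probable noise. The reduction it prints depends entirely on the assumed point density and is not meant to reproduce the 90% figure above.

```python
import numpy as np

FLOATS_PER_POINT = 3          # x, y, z
FLOATS_PER_CELL = 3 + 6       # mean + unique entries of a symmetric 3x3 covariance


def summarise_cells(points, cell_size=1.0, min_points=5):
    """Replace each cube's raw points with a Gaussian (mean, covariance).

    Cubes with fewer than min_points returns are dropped as probable noise;
    min_points is a hypothetical threshold, not a published parameter.
    """
    keys = np.floor(points / cell_size).astype(int)
    summaries = {}
    for key in np.unique(keys, axis=0):
        members = points[np.all(keys == key, axis=1)]
        if len(members) >= min_points:
            summaries[tuple(key)] = (members.mean(axis=0),
                                     np.cov(members, rowvar=False))
    return summaries


rng = np.random.default_rng(1)
# Hypothetical dense returns from a nearby wall (x = 0.5 m, 4 m wide, 2 m tall).
wall = np.column_stack((
    0.5 + rng.normal(0.0, 0.02, 2000),
    rng.uniform(0, 4, 2000),
    rng.uniform(0, 2, 2000),
))
# Hypothetical sparse returns from raindrops scattered through the air.
rain = rng.uniform([-5, -5, 0], [5, 5, 3], size=(50, 3))
scan = np.vstack((wall, rain))

cells = summarise_cells(scan)
raw_floats = len(scan) * FLOATS_PER_POINT
model_floats = len(cells) * FLOATS_PER_CELL
reduction = 1 - model_floats / raw_floats
print(f"{len(scan)} points -> {len(cells)} cells, data reduced by {reduction:.1%}")
```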

When snow covers the ground and falls from the sky in winter, the shape of the measured world changes with the conditions. Sensible 4’s winter tests have shown that positioning based on probability modelling remains reliable even when weather conditions mask over 50% of the laser scan data.
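One way to see why a distribution-based model tolerates missing data: if weather hides returns at random, the points that remain in a cube still estimate roughly the same mean and covariance. The Python sketch below assumes exactly that, random masking of about 60% of the returns in one hypothetical cube, which is a simplification; real snow also adds returns and changes the shape of the scene, as described above.

```python
import numpy as np

rng = np.random.default_rng(2)
# Hypothetical returns inside one 1 m cube on a flat surface (e.g. a wall):
# thin in x, spread out in y and z.
cell_points = np.column_stack((
    0.5 + rng.normal(0.0, 0.02, 1000),
    rng.uniform(0, 1, 1000),
    rng.uniform(0, 1, 1000),
))

# Assumption: weather hides returns uniformly at random (about 60% of them here).
keep = rng.random(1000) > 0.6
surviving = cell_points[keep]

full_mean, full_cov = cell_points.mean(axis=0), np.cov(cell_points, rowvar=False)
part_mean, part_cov = surviving.mean(axis=0), np.cov(surviving, rowvar=False)

print(f"kept {keep.mean():.0%} of the returns")
print("mean shift (m):", np.abs(full_mean - part_mean).round(3))
print("largest covariance change:", np.abs(full_cov - part_cov).max().round(4))
```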

It’s also worth pointing out that the probability model is only used for positioning, where small objects and details are irrelevant. Obstacle detection is handled separately by a fusion of sensors, so making very broad generalizations about the surroundings for positioning poses no safety risk.

“Even though determining the position of the vehicle is based on probabilities, it’s reliable and accurate down to just a few centimeters”, Saarinen concludes. “And this is repeatable – under any weather conditions.”