Obstacle Detection and Tracking System (ODTS) Enables a Smooth, Safe Ride – for Everyone

An autonomous vehicle relies on two systems to understand its surroundings: the Positioning System and the Obstacle Detection and Tracking System (ODTS). Having discussed positioning in an earlier article, we’ll now focus on ODTS.

The two systems share some sensors, and each also has dedicated sensors of its own. Both require processing power and software, and the vehicle Control and Planning system that drives the vehicle receives input from both.

Identifying Objects Is Central to Predictive Driving

If a traffic light turns red in the distance, you’d expect the traffic to start slowing down or come to a complete stop. Or, if you see a pedestrian approaching a crossing with purpose, you would probably conclude that they’re likely to cross. Anticipating or reading traffic like this is essential for safe driving, whether there’s a human or a computer behind the wheel.

“For an autonomous vehicle, identifying and classifying objects is the first step towards this kind of predictive driving,” says Hamza Hanchi, Autonomous Vehicle Engineer at Sensible 4. Detecting and tracking obstacles is the primary focus for Hamza and his team.

For example, a concrete barrier on the side of the road is unlikely to jump into the driving lane, whereas a human might. A car parked on the side of the road is also a potential dynamic object, as it may pull away or someone might step out of the car at any moment.

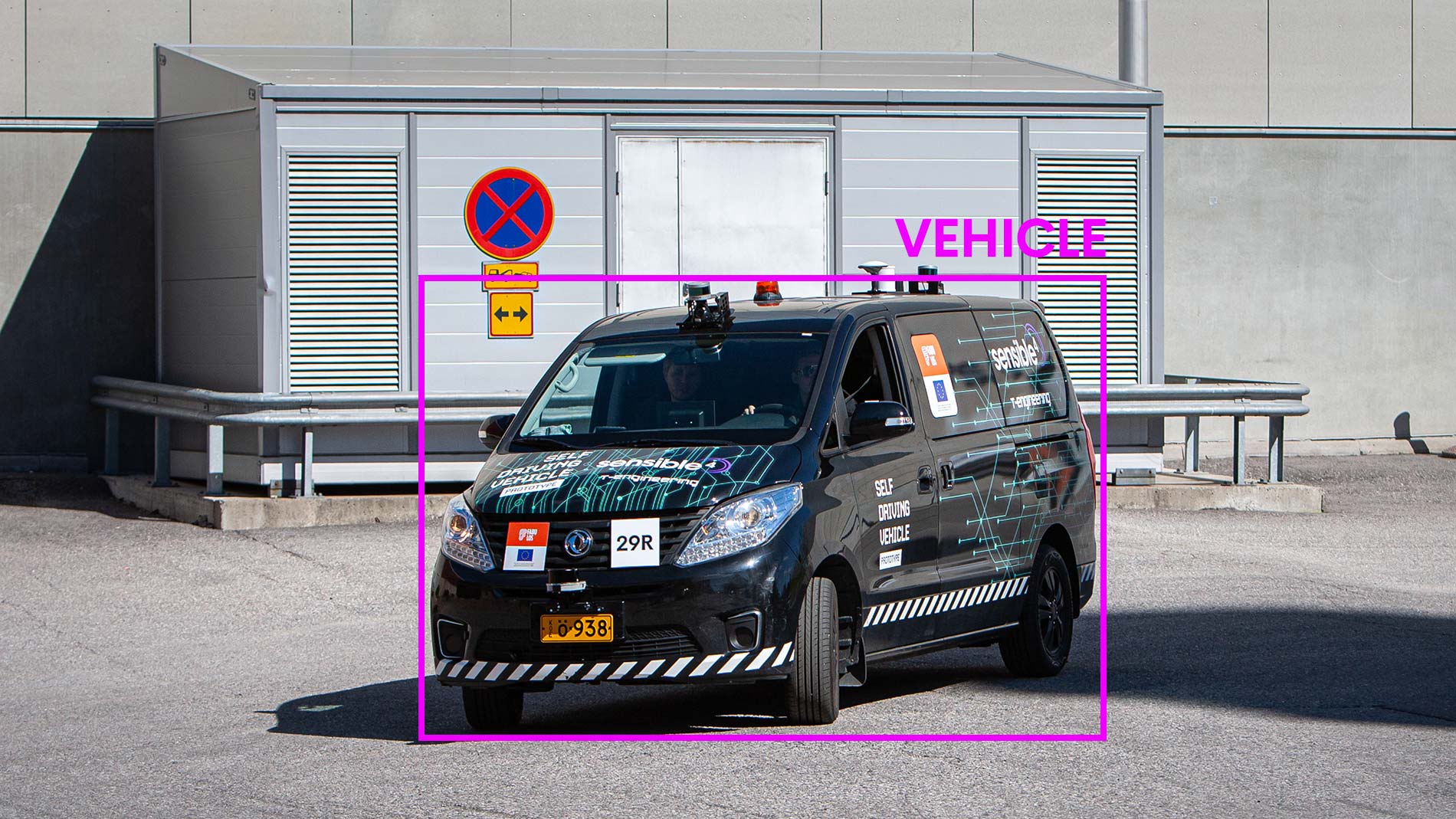

Using multiple sensors, the autonomous vehicle identifies and classifies surrounding objects including pedestrians and other vehicles. This is a prerequisite for safe maneuvering in traffic.

The first part of the visualization shows the world as the autonomous vehicle sees it, based on laser scanner (LIDAR) data. The second part was captured during the FABULOS pilot in Pasila, Helsinki, in spring 2020, using the Dongfeng (T-Engineering) CM7 vehicle.

Three Sensors Working Together

There are three sensor types that are primarily used for scanning the vehicle’s surroundings and, when it comes to identifying objects, the camera is the most important.

“With cameras, we can detect and identify other road users using neural net machine vision. These cameras include regular visible light cameras and various thermal cameras,” notes Hanchi.

Cameras are able to observe the terrain with a high level of detail, and they’re also relatively cheap. However, they have trouble dealing with challenging lighting conditions such as darkness or glare from the sun when it shines directly at them from the horizon. Cameras also don’t work very well in particularly poor weather and they struggle to measure the size and distance of an object accurately.

Another important sensor is the laser scanner, which is also the primary device for vehicle positioning. When positioning, the measurement results are generalised as probability distributions, but object identification requires more accurate point data. The laser scanner measures object size and distance with high accuracy, but it is less effective at identifying distant objects.

Radar Measures Relative Speed

In addition to the sensors mentioned above, we also use radio frequency radars, which are ideal for detecting other vehicles in all weather and lighting conditions, as well as for spotting pedestrians and other unprotected road users. However, radar’s resolution and ability to identify objects don’t match those of laser scanners or cameras.

On the other hand, radar has the unique ability to measure an object’s speed relative to the autonomous vehicle directly. Cameras and laser scanners cannot do this, as they only measure an object’s location at a specific point in time. They can estimate an object’s direction and speed by comparing locations over time, but this requires a reliable series of measurements.
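To illustrate why position-only sensors need a series of measurements, here is a minimal sketch of estimating velocity from timestamped positions with a least-squares fit. The function name and the sample format are assumptions made for this example; it is not the method used in Sensible 4 vehicles.

```python
def estimate_velocity(samples):
    """Estimate (vx, vy) in m/s from timestamped positions.

    samples: list of (t, x, y) tuples, t in seconds, x and y in metres.
    A least-squares slope is fitted per axis, so noise in a single
    sample has less influence than with a two-point difference.
    """
    if len(samples) < 2:
        raise ValueError("need at least two timestamped positions")
    n = len(samples)
    t_mean = sum(s[0] for s in samples) / n
    x_mean = sum(s[1] for s in samples) / n
    y_mean = sum(s[2] for s in samples) / n
    denom = sum((s[0] - t_mean) ** 2 for s in samples)
    if denom == 0:
        raise ValueError("timestamps must not all be identical")
    vx = sum((s[0] - t_mean) * (s[1] - x_mean) for s in samples) / denom
    vy = sum((s[0] - t_mean) * (s[2] - y_mean) for s in samples) / denom
    return vx, vy


# Hypothetical track: an object moving along x, sampled every 0.1 s.
track = [(0.0, 0.0, 0.0), (0.1, 0.5, 0.0), (0.2, 1.0, 0.0)]
vx, vy = estimate_velocity(track)
```

With only one sample there is no velocity estimate at all, and with few or noisy samples the estimate is unreliable, which is the limitation that radar’s direct speed measurement avoids.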

This ability of radar is utilised in adaptive cruise control (ACC), which is commonly used in the automotive industry. For ACC to function, it needs to know whether the distance to the vehicle in front is decreasing or increasing.
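As a rough sketch of the question ACC has to answer, the relative speed reported by a radar can be turned into a gap trend. The function name, the sign convention (negative means the gap is shrinking) and the deadband value are assumptions made for this example.

```python
def gap_trend(range_rate_mps, deadband_mps=0.2):
    """Classify the gap to the vehicle ahead from radar range rate.

    range_rate_mps: rate of change of the distance in m/s; negative
    values mean the gap is shrinking (assumed sign convention).
    deadband_mps: hypothetical tolerance so that small measurement
    noise is not reported as a change.
    """
    if range_rate_mps < -deadband_mps:
        return "closing"
    if range_rate_mps > deadband_mps:
        return "opening"
    return "steady"
```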

As we can see, no single sensor identifies objects reliably in all conditions. For the best results, a number of sensors must work together to provide results that can be analysed as a unit – this is called sensor fusion.
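One simple way to picture sensor fusion is inverse-variance weighting, where each sensor’s estimate counts in proportion to how much it is trusted. This is a generic textbook technique shown under assumed noise values, not a description of the fusion used in Sensible 4 vehicles.

```python
def fuse_estimates(estimates):
    """Fuse independent estimates of the same quantity.

    estimates: list of (value, variance) pairs, for example the distance
    to an object as seen by different sensors. Returns the fused
    (value, variance) using inverse-variance weighting.
    """
    if not estimates:
        raise ValueError("need at least one estimate")
    weights = [1.0 / variance for _, variance in estimates]
    total = sum(weights)
    value = sum(w * v for w, (v, _) in zip(weights, estimates)) / total
    return value, 1.0 / total


# Hypothetical numbers: a camera distance estimate with high variance
# and a laser scanner estimate with low variance, both in metres.
camera = (20.0, 4.0)
lidar = (22.0, 1.0)
distance, variance = fuse_estimates([camera, lidar])
```

The fused distance lies closer to the more precise measurement, and the fused variance is smaller than that of either sensor alone.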

Autonomous Vehicles See Into the Future

ODTS can track the movements of identified objects and then classify them, for example as vehicles or pedestrians. A vehicle rarely makes a sudden change in direction, whereas a pedestrian is more likely to do so. A cyclist, on the other hand, moves much faster than a pedestrian and might be riding on the road or on the pavement. A cyclist is also more likely to change direction than a car. The direction and speed of an object, along with classification data, are used to generate a probability model of an object’s position and trajectory two to three seconds into the future.
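The idea can be sketched with a constant-velocity prediction whose uncertainty grows faster for object classes that change direction more readily. The `Track` type, the linear growth model and all numeric values below are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass

# Hypothetical growth of position uncertainty per second of look-ahead (m/s).
# Less predictable classes get a larger value.
SIGMA_GROWTH_M_PER_S = {"vehicle": 0.5, "cyclist": 1.0, "pedestrian": 1.5}


@dataclass
class Track:
    x: float      # position in metres
    y: float
    vx: float     # velocity in m/s
    vy: float
    label: str    # classification result, a key of SIGMA_GROWTH_M_PER_S


def predict(track, horizon_s=3.0, step_s=0.5, sigma0_m=0.2):
    """Return a list of (t, x, y, sigma) predictions up to horizon_s.

    The position follows a constant-velocity model; sigma is the
    assumed standard deviation of the position at time t.
    """
    growth = SIGMA_GROWTH_M_PER_S[track.label]
    steps = int(round(horizon_s / step_s))
    predictions = []
    for i in range(1, steps + 1):
        t = i * step_s
        predictions.append(
            (t, track.x + track.vx * t, track.y + track.vy * t, sigma0_m + growth * t)
        )
    return predictions


pedestrian = Track(x=0.0, y=0.0, vx=2.0, vy=0.0, label="pedestrian")
future = predict(pedestrian)
```

Each entry describes where the object is expected to be and how uncertain that expectation is, which is the kind of information a planner needs to keep a suitable margin.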

An autonomous vehicle, such as the Dongfeng CM7 electrified by T-Engineering, can likewise predict its own position very reliably. By combining trajectory predictions, map data and other inputs, the system generates a projection of the relative positions of the vehicle and the objects around it a few seconds into the future, establishing a framework for vehicle control.

Avoiding collisions is an essential function of this prediction model: if the autonomous vehicle’s trajectory is likely to cross that of another road user, the vehicle needs to slow down predictively. As a last resort, the vehicle can be brought to a complete stop if no other safe way is found to avoid a collision.
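A simplified version of this check compares the predicted paths of the vehicle and another road user and looks at how close they come within the prediction horizon. The constant-velocity model, the function names and the distance thresholds are assumptions made for this sketch; a real planner would consider many more options before stopping.

```python
import math


def min_separation(ego, other, horizon_s=3.0, step_s=0.1):
    """Smallest predicted distance between two constant-velocity objects.

    ego, other: (x, y, vx, vy) tuples in metres and m/s.
    Returns (distance_m, time_s) of the closest predicted approach.
    """
    best_distance, best_time = float("inf"), 0.0
    steps = int(round(horizon_s / step_s))
    for i in range(steps + 1):
        t = i * step_s
        dx = (ego[0] + ego[2] * t) - (other[0] + other[2] * t)
        dy = (ego[1] + ego[3] * t) - (other[1] + other[3] * t)
        distance = math.hypot(dx, dy)
        if distance < best_distance:
            best_distance, best_time = distance, t
    return best_distance, best_time


def choose_action(ego, other, slow_radius_m=5.0, stop_radius_m=2.0):
    """Pick a coarse action from the closest predicted approach.

    The two radii are hypothetical safety margins.
    """
    distance, _ = min_separation(ego, other)
    if distance < stop_radius_m:
        return "stop"
    if distance < slow_radius_m:
        return "slow_down"
    return "proceed"


# Hypothetical scene: the vehicle drives along x at 5 m/s while a
# pedestrian walks towards its lane from the side.
ego = (0.0, 0.0, 5.0, 0.0)
pedestrian = (10.0, -3.0, 0.0, 1.5)
action = choose_action(ego, pedestrian)
```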

Only Fast Detection Is Useful Detection

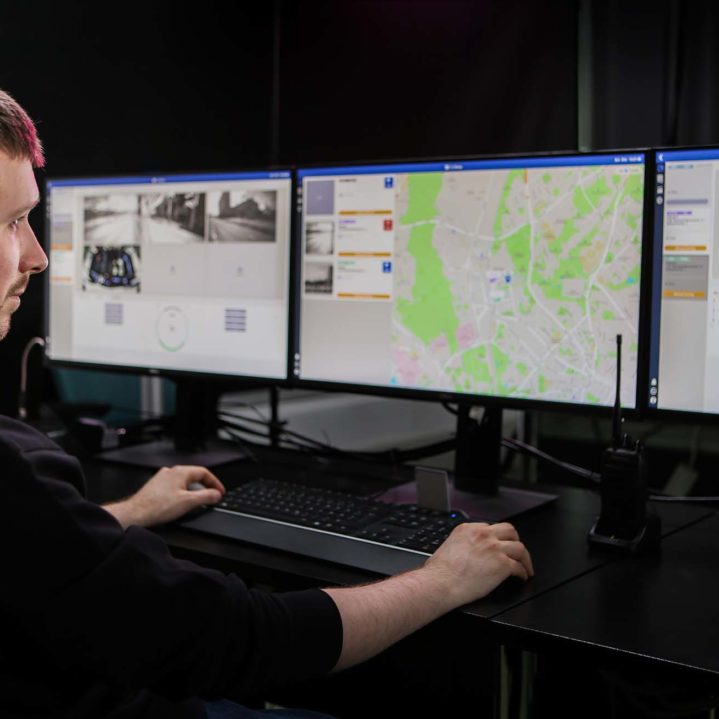

An autonomous vehicle tracks dozens, sometimes even hundreds of surrounding objects, continuously evaluating their intentions. Making these calculations fast enough to leave sufficient time to make the right steering decisions takes considerable processing power. This is a demanding task for onboard computers.

In addition to detecting and tracking surrounding objects, Sensible 4 vehicles also have a so-called reactive layer component. The reactive layer is tasked with scanning the vehicle’s immediate surroundings in its direction of travel and bringing the vehicle to a stop when it detects anything ahead that would prevent safe driving. The reactive layer doesn’t employ machine vision or neural nets, nor does it attempt to identify objects. It simply stops the vehicle when absolutely necessary, which keeps the mechanism fast and reliable.
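The principle can be sketched as a plain geometric test on raw sensor points, with no classification involved. The corridor dimensions, the coordinate convention and the function name are assumptions made for this example, not details of the Sensible 4 implementation.

```python
def must_stop(points, corridor_half_width_m=1.2, stop_distance_m=5.0):
    """Return True if any point lies in the corridor directly ahead.

    points: iterable of (x, y) in the vehicle frame, with x pointing
    forward and y to the left, both in metres (assumed convention).
    The corridor is a rectangle in front of the vehicle; both of its
    dimensions are hypothetical.
    """
    return any(
        0.0 < x <= stop_distance_m and abs(y) <= corridor_half_width_m
        for x, y in points
    )


# A point 3 m ahead and slightly to the left blocks the corridor.
blocked = must_stop([(3.0, 0.5)])
```

Because the check is a single pass over the points with two comparisons each, it stays cheap and predictable, which fits the role of a last line of defence.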

In principle, an autonomous vehicle could be built on the reactive layer alone – it would drive slowly enough, stop every time the situation demanded it, and stand still until any obstacles had cleared. However, this would result in poor passenger comfort, disrupt all road users and ultimately increase traffic risks.

Obstacle detection is chiefly about maintaining traffic safety and flow and protecting both vehicles and unprotected road users. Detecting and tracking obstacles makes it possible to predict the actions of other road users, providing autonomous vehicle control computers with sufficient understanding of the situation and an accurate framework for decision-making. This enables smooth and steady travel while driving in traffic.

Ultimately, an autonomous vehicle does the same as an experienced human driver: it reads the traffic, makes predictions, and gives way when needed.