Rain and Fog Challenge the Sensors

Autonomous Vehicles and Bad Weather

Practically all development in autonomous driving wrestles with one random and complex element that continues to challenge even the most experienced engineers: the weather.

Weather has a huge effect on traffic safety. In the United States alone, bad weather plays a significant part in roughly 1.2 million traffic accidents each year, or over three thousand accidents every day.

Different Sensors Function in Different Ways in Different Conditions

According to the NHTSA (National Highway Traffic Safety Administration), the most common weather-related factors that lead to accidents are rain and wet road surfaces.

Frozen roads actually pose a much smaller problem than wet roads, mainly because the season in which roads can freeze is much shorter and the affected areas are smaller. Not surprisingly, rain and fog are the main obstacles that autonomous vehicle sensors have to contend with.

An autonomous vehicle sees the world through various sensors, typically cameras, laser scanners (so-called LiDAR sensors), and radars. Weather conditions pose problems particularly for sensors operating at or near visible light frequencies, i.e. cameras and LiDAR sensors, which typically use near-infrared light.

Fog, rain, and snow obstruct the camera’s view, much as they make it difficult for a human driver to see ahead. The thicker the fog or the more intense the rain, the harder it is to identify objects in the distance.
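The relationship between fog density and viewing distance can be illustrated with Koschmieder's law, a standard model from atmospheric optics in which the contrast of an object against the horizon sky falls off exponentially with distance. The sketch below is only an illustration: the function names are made up for this example, and the 5% contrast threshold is the convention used for meteorological optical range, not something taken from any particular camera system.

```python
import math


def extinction_from_visibility(visibility_m, contrast_threshold=0.05):
    """Extinction coefficient (1/m) implied by a given meteorological visibility.

    Assumption: visibility is the distance at which contrast drops
    to contrast_threshold (5% by default).
    """
    return -math.log(contrast_threshold) / visibility_m


def apparent_contrast(inherent_contrast, distance_m, extinction_per_m):
    """Contrast of an object against the horizon sky after passing through fog."""
    return inherent_contrast * math.exp(-extinction_per_m * distance_m)


# Hypothetical scenario: a high-contrast object 100 m ahead.
thick_fog = apparent_contrast(1.0, 100.0, extinction_from_visibility(50.0))
light_haze = apparent_contrast(1.0, 100.0, extinction_from_visibility(1000.0))
```

Under these assumptions the object keeps roughly three quarters of its contrast in light haze with 1,000 m visibility, but well under one percent in thick fog with 50 m visibility, which is why distant objects vanish first.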

Varying lighting conditions also compromise camera performance. In the dark, cameras mostly have to rely on the vehicle’s lights or streetlights. In daylight, if the sun is close to the horizon or the terrain is covered by snow, cameras may be blinded by the brightness or left without any distinguishable objects in view.

Various objects can also look quite different under varying lighting conditions, making it all the more difficult for machine vision to operate.

LiDAR Works in the Fog – Aided by Software

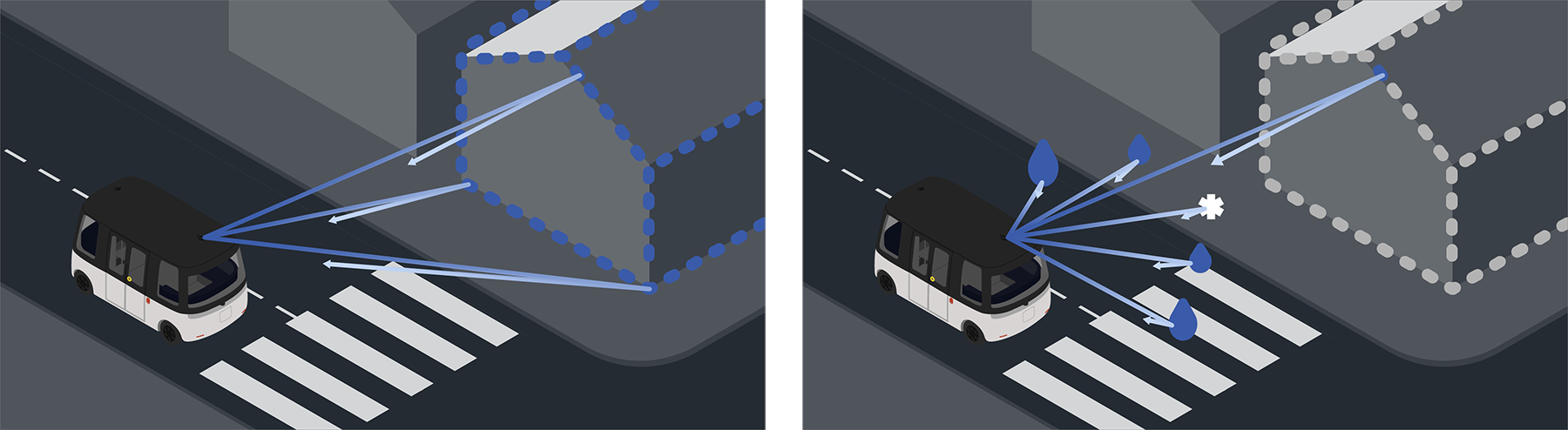

LiDAR sensors transmit laser light and measure its reflections. As a result, LiDAR sensors produce a real-time 3D model of their surroundings.
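As a rough illustration of how a reflection becomes a 3D point, the sketch below assumes a time-of-flight LiDAR, the most common design: the sensor times the round trip of each pulse and combines the resulting range with the beam's known direction. The function names and the 200-nanosecond example are hypothetical and not taken from any specific sensor.

```python
import math

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def range_from_time_of_flight(round_trip_s):
    """Distance to the reflecting surface; the pulse travels out and back."""
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


def to_cartesian(range_m, azimuth_rad, elevation_rad):
    """Convert one range/angle measurement to an (x, y, z) point in the sensor frame."""
    horizontal = range_m * math.cos(elevation_rad)
    x = horizontal * math.cos(azimuth_rad)
    y = horizontal * math.sin(azimuth_rad)
    z = range_m * math.sin(elevation_rad)
    return (x, y, z)


# A pulse that returns after 200 nanoseconds, fired straight ahead and level.
distance = range_from_time_of_flight(200e-9)
point = to_cartesian(distance, azimuth_rad=0.0, elevation_rad=0.0)
```

A return after 200 nanoseconds corresponds to a surface about 30 m away. Repeating this for every pulse in a sweep yields the point cloud that makes up the 3D model.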

Rain, fog, autumn leaves, or other debris carried by the wind distort the 3D image rendered by the LiDAR sensor. This type of interference can generate random, erroneous observations of the environment, making it more difficult to measure actual objects.

LiDAR sensors, by design, produce massive amounts of data. The devices may, in fact, measure up to a million points per second. This sheer volume of data is useful, as it provides great opportunities for computational error correction. For example, raindrops or blowing sand do not stay put, unlike traffic signs or buildings, so measurements that fail to persist from one scan to the next can be treated as noise.
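One simple way to exploit the fact that weather clutter does not stay put is a persistence filter: divide space into small cells (voxels) and keep only points whose cell was occupied in several consecutive scans. The sketch below is a minimal illustration of that idea, not the method used by any particular vendor. The function names, the 0.5 m voxel size, and the three-scan threshold are all assumptions, and the scans are assumed to be already aligned in one fixed world frame.

```python
from collections import Counter


def voxel_key(point, voxel_size_m):
    """Map an (x, y, z) point to the integer index of the grid cell containing it."""
    return tuple(int(coord // voxel_size_m) for coord in point)


def persistent_points(scans, voxel_size_m=0.5, min_hits=3):
    """Keep points from the latest scan whose voxel was occupied in at least min_hits scans.

    Assumes every scan is expressed in the same fixed frame, i.e. vehicle
    motion has already been compensated elsewhere.
    """
    hits = Counter()
    for scan in scans:
        # Count each voxel at most once per scan.
        occupied = {voxel_key(p, voxel_size_m) for p in scan}
        hits.update(occupied)
    return [p for p in scans[-1] if hits[voxel_key(p, voxel_size_m)] >= min_hits]


# Three hypothetical scans: a traffic sign near (10, 2, 1) plus one raindrop each.
scans = [
    [(10.2, 2.2, 1.2), (3.1, 0.2, 1.5)],
    [(10.3, 2.3, 1.2), (5.7, -1.4, 0.9)],
    [(10.2, 2.2, 1.3), (4.2, 0.8, 2.3)],
]
kept = persistent_points(scans)
```

In this toy example only the sign's point survives, because the raindrops land in a different cell in every scan. A real system would also have to handle moving objects such as other vehicles, which a purely static filter like this would discard.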

LiDAR sensor weather-proofing is a challenge that can, in many cases, be solved through advanced software. For example, Sensible 4’s positioning algorithm – measuring the shape of terrain and buildings surrounding the vehicle – works even when over 50% of the measured information is distorted by weather.

The greatest strength of LiDAR sensors is their independence of lighting conditions. LiDAR sensors work equally well at night and during the day, and they do not get blinded by low sunlight on the horizon or by glaring banks of snow.

Radar Sees Through Rain and Dust

The third sensor type, radar, is largely weather-independent. Its ability to measure through rain and clouds is widely exploited in land vehicles, seafaring, aviation, and space technology. Radars have been in commercial use in vehicles for years, and the technology is now proven.

The primary issue with radars is the difficulty of interpreting the measured information. Radars work best when measuring large, moving metallic objects, for example when determining the position and direction of other vehicles. This is why radars are commonly used in Adaptive Cruise Control systems. Detecting other common road users, such as pedestrians and cyclists, is considerably more challenging for radars.

Radar’s ability to measure through rain and fog, however, is such a significant benefit that radars are quickly becoming an integral part of all autonomous driving. Still, interpreting the radar image remains a challenge: an aluminum can on a sidewalk may return a significantly stronger signal than a person standing next to it.

The latest advances in radar technology are in the field of neural networks. Just as artificial intelligence (AI) can be taught to interpret images produced by a camera, it can be taught to make interpretations based on radar data. AI research is advancing rapidly, and as computing power continues to grow, AI is likely to play an even larger role in autonomous driving in the future.